In today’s data-driven world, organisations are increasingly drawn to the transformative potential of artificial intelligence (AI) and machine learning (ML) technologies, from large language models (LLMs) to computer vision. However, realising this potential often depends on first solving foundational data engineering challenges. In this article, we’ll explore the Data Analytics Hierarchy of Needs and discuss DataOps as a key methodology for streamlining data analytics processes, unlocking efficiency, and driving innovation.

Understanding Data Analytics Maturity

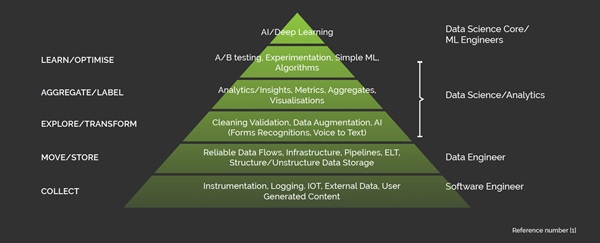

The Data Analytics Hierarchy of Needs offers a framework for assessing an organisation’s data analytics maturity. It shows that most organisations operate within the bottom four levels and that only mature analytics teams reach the top two.

The hierarchy progresses through stages such as data collection, integration, analytics, and automation. Climbing it strengthens data analytics capabilities, enabling informed decision-making and fostering innovation.

Common data management and engineering challenges include data silos, diverse data formats, data errors and validation, manual maintenance, and a growing proliferation of data tools and platforms.

Paving the Way to DataOps: Unlocking Efficiency & Innovation

Although DataOps is often dismissed as a buzzword, it is more than a trend: it is a methodology that can transform data analytics processes. Let’s look at the core principles of DataOps and their relevance to data analytics.

DataOps encompasses tools, methods, and a cultural shift that focuses on delivering consistent value to business stakeholders. It integrates agile development methods, DevOps automation, and Statistical Process Control (SPC) into data analytics problem-solving.

Agile Development Methods

Agile development supports the need for ongoing business improvement and innovation. It emphasises self-organising, cross-functional teams, a way of working that suits data analytics environments well.

DevOps Automation

DevOps automation, including continuous integration, continuous delivery, and infrastructure automation, lets analytics teams manage delivery pipelines more efficiently, so they can deliver insights continuously and adapt quickly to technological change.

Statistical Process Control (SPC)

SPC, developed by Walter A. Shewhart in the 1920s for quality control in manufacturing, is applied to data analytics by adding automated checks and measurement tests to the data pipeline, which improves both quality and efficiency.
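To make the SPC idea concrete, here is a minimal Python sketch of a Shewhart-style control check on a pipeline metric. It assumes the monitored metric is a daily row count and that the control limits are the historical mean plus or minus three standard deviations. The function names and sample numbers are hypothetical, and a textbook individuals chart would estimate spread from the average moving range rather than the sample standard deviation used here.

```python
from statistics import mean, stdev


def control_limits(history: list[float], k: float = 3.0) -> tuple[float, float]:
    """Return (lower, upper) limits as mean +/- k sample standard deviations.

    Simplification: a classic Shewhart individuals chart estimates spread
    from the average moving range; the sample standard deviation is used here.
    """
    if len(history) < 2:
        raise ValueError("need at least two historical observations")
    centre = mean(history)
    spread = stdev(history)
    return centre - k * spread, centre + k * spread


def check_metric(name: str, value: float, history: list[float], k: float = 3.0) -> dict:
    """Compare the latest value of a pipeline metric against its control limits."""
    lower, upper = control_limits(history, k)
    return {
        "metric": name,
        "value": value,
        "lower": lower,
        "upper": upper,
        "in_control": lower <= value <= upper,
    }


if __name__ == "__main__":
    # Hypothetical daily row counts from previous runs of an "orders" load.
    history = [10_120, 9_980, 10_050, 10_210, 9_890, 10_070, 10_000]
    result = check_metric("orders.row_count", 6_400, history)
    if not result["in_control"]:
        print(f"ALERT: {result['metric']}={result['value']} outside "
              f"[{result['lower']:.0f}, {result['upper']:.0f}]")
```

In a real pipeline the history would come from stored run metadata, and an out-of-control result would more likely fail the run or raise an alert than print a message.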

DataOps Empowers Data Analytics Teams To:

- Reduce time to insight.

- Improve analytic quality.

- Lower the marginal cost of asking the next business question.

- Boost team morale by moving beyond reliance on hope, heroism, and caution.

- Promote efficiency through agile processes, reuse, and refactoring.

Best Practices in DataOps

Best practices in DataOps centre on a culture of continuous customer focus, prioritising teamwork, embracing change, keeping things simple, and implementing continuous integration. These principles help organisations unlock the full potential of their data analytics processes.

Implementing DataOps in Your Data Analytics Pipeline

In the final section of our discussion, we explore specific best practices for implementing DataOps processes in data analytics pipelines: automated testing, version control, multiple environments, the Medallion Architecture, configuration management, and Data Infrastructure as Code. By adopting these practices, organisations can make their data analytics work not only efficient but also future-proof.

Embrace DataOps for Excellence

As organisations pursue data analytics excellence, DataOps emerges as a pivotal methodology. It streamlines processes, improves quality, and drives innovation.

For organisations seeking to enhance their data management and data analytics pipelines, here are some best practices inspired by DataOps:

- Automated Testing – Continuous monitoring of data values within the pipeline. Implementation of regression testing in the CI pipeline.

- Version Control – Adoption of version control systems such as Git to track code changes.

- Using Multiple Environments – Encouraging team members to work in isolated feature branches. Consideration of the Medallion Architecture for multi-environment collaboration.

- Medallion Architecture – Organisation of data into raw, curated, and enriched zones (often called the bronze, silver, and gold layers) for efficient data management.

- Adding Configuration to Your Pipelines – Incorporation of runtime configuration changes for flexibility. Use of configuration files and environment variables to streamline adjustments.

- Data Infrastructure as Code – Definition of data infrastructure in version-controlled code (Infrastructure as Code, IaC), so that resources can be provisioned and managed consistently and repeatably.
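Several of these practices (runtime configuration, Medallion-style layers, and automated regression testing) can be illustrated together in a small Python sketch. Everything in it is hypothetical: the environment variable names `PIPELINE_ENV` and `PIPELINE_MIN_AMOUNT`, the comma-separated order records, the validation rule, and the per-customer aggregate are assumptions chosen for illustration, and plain Python lists stand in for the tables a real data platform would use.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class PipelineConfig:
    environment: str   # hypothetical environment name, e.g. "dev", "test" or "prod"
    min_amount: float  # hypothetical validation rule: smaller amounts are rejected


def load_config() -> PipelineConfig:
    """Read runtime configuration from (hypothetical) environment variables, with local defaults."""
    return PipelineConfig(
        environment=os.environ.get("PIPELINE_ENV", "dev"),
        min_amount=float(os.environ.get("PIPELINE_MIN_AMOUNT", "0")),
    )


def to_bronze(raw_lines: list[str]) -> list[list[str]]:
    """Raw (bronze) zone: keep every record as landed, only split into fields."""
    return [line.split(",") for line in raw_lines]


def to_silver(bronze: list[list[str]], config: PipelineConfig) -> list[dict]:
    """Curated (silver) zone: parse types and drop records that fail validation."""
    silver = []
    for fields in bronze:
        if len(fields) != 3:
            continue  # malformed record
        order_id, customer, amount_text = (f.strip() for f in fields)
        try:
            amount = float(amount_text)
        except ValueError:
            continue  # non-numeric amount
        if amount < config.min_amount:
            continue  # fails the configured business rule
        silver.append({"order_id": order_id, "customer": customer, "amount": amount})
    return silver


def to_gold(silver: list[dict]) -> dict[str, float]:
    """Enriched (gold) zone: business-level total order amount per customer."""
    totals: dict[str, float] = {}
    for row in silver:
        totals[row["customer"]] = totals.get(row["customer"], 0.0) + row["amount"]
    return totals


def run_pipeline(raw_lines: list[str], config: PipelineConfig) -> dict[str, float]:
    return to_gold(to_silver(to_bronze(raw_lines), config))


def test_pipeline_regression() -> None:
    """Regression test that a CI job could run on every commit (e.g. with pytest)."""
    config = PipelineConfig(environment="test", min_amount=0.0)
    raw = ["1,alice,10.5", "2,bob,abc", "3,alice,4.5", "broken-row", "4,carol,-2"]
    assert run_pipeline(raw, config) == {"alice": 15.0}


if __name__ == "__main__":
    test_pipeline_regression()
    print(run_pipeline(["1,alice,10.5", "2,bob,7"], load_config()))
```

Keeping each zone in its own function means each can be tested in isolation, and setting `PIPELINE_MIN_AMOUNT` differently per environment changes behaviour without a code change.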

Improve Your Data Analytics Pipeline with DataOps

With DataOps, organisations can transform their data analytics pipelines into agile, efficient, and consistent systems. By implementing these best practices, they can reduce time to insight, improve analytic quality, lower costs, boost team morale, and work more efficiently. Embracing DataOps is essential for staying ahead in the ever-evolving landscape of data management and analytics and for making the most of valuable data assets.

As you embark on your journey toward data analytics excellence, it’s clear that DataOps is a pivotal methodology. To learn more about implementing these practices, access our eBook, “Solving the Data Analytics Best Practice Puzzle”.